EarSpy attack eavesdrops on Android phones via motion sensors

A team of researchers has developed an eavesdropping attack for Android devices that can, to various degrees, recognize the caller’s gender and identity, and even discern private speech.

Named EarSpy, the side-channel attack explores new eavesdropping possibilities by capturing motion sensor readings caused by reverberations from the ear speakers in mobile devices.

EarSpy is an academic effort of researchers from five American universities (Texas A&M University, New Jersey Institute of Technology, Temple University, University of Dayton, and Rutgers University).

While this type of attack has been explored with smartphone loudspeakers, ear speakers were considered too weak to generate enough vibration to make such a side-channel attack practical.

However, modern smartphones use more powerful stereo speakers than models from a few years ago, producing much better sound quality and stronger vibrations.

Similarly, modern devices use more sensitive motion sensors and gyroscopes that can record even the tiniest resonances from speakers.

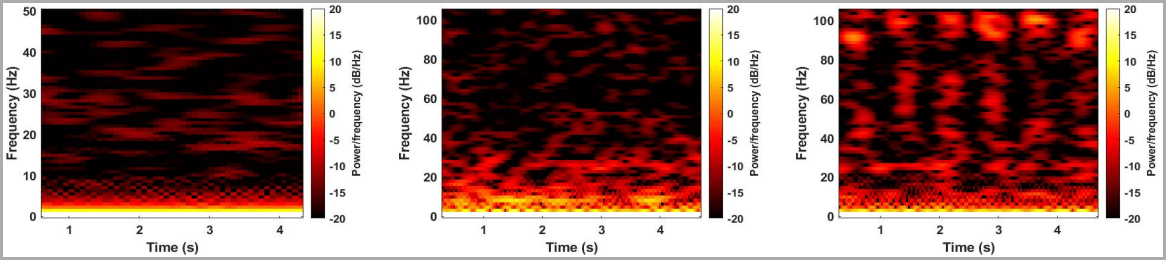

Proof of this progress is shown below, where the earphone of a 2016 OnePlus 3T barely registers on the spectrogram while the stereo ear speakers of a 2019 OnePlus 7T produce significantly more data.

source: (arxiv.org)
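A spectrogram shows how a signal's energy is distributed across frequencies over time. One minimal way to compute it is to slide a window over the sensor trace and take the magnitude of each window's discrete Fourier transform. The standard-library Python sketch below illustrates this on a synthetic trace; the 500 Hz sampling rate, 64-sample Hann window, 32-sample hop, and 125 Hz test tone are illustrative assumptions, not parameters from the paper, and a real analysis would use an FFT library instead of this naive O(n²) DFT.

```python
import cmath
import math


def dft_magnitudes(frame):
    """Magnitude of each DFT bin from 0 up to the Nyquist bin (naive O(n^2) DFT)."""
    n = len(frame)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        mags.append(abs(acc))
    return mags


def spectrogram(signal, window=64, hop=32):
    """Return one list of DFT magnitudes per Hann-windowed frame of the signal."""
    hann = [0.5 - 0.5 * math.cos(2 * math.pi * i / (window - 1)) for i in range(window)]
    frames = []
    for start in range(0, len(signal) - window + 1, hop):
        chunk = [signal[start + i] * hann[i] for i in range(window)]
        frames.append(dft_magnitudes(chunk))
    return frames


if __name__ == "__main__":
    fs = 500.0    # assumed accelerometer sampling rate (Hz)
    tone = 125.0  # hypothetical vibration frequency (Hz)
    signal = [math.sin(2 * math.pi * tone * t / fs) for t in range(512)]
    frames = spectrogram(signal)
    peak_bin = max(range(len(frames[0])), key=lambda k: frames[0][k])
    # Each bin spans fs / window = 7.8125 Hz, so bin 16 corresponds to 125 Hz.
    print(len(frames), peak_bin * fs / 64)  # prints: 15 125.0
```

Stronger ear-speaker vibrations show up as larger magnitudes in the bins that match the audio's frequency content, which is what makes the newer device stand out in the comparison.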

Experiment and results

The researchers used OnePlus 7T and OnePlus 9 devices in their experiments, along with varying sets of pre-recorded audio played only through the ear speakers of the two devices.

The team also used the third-party app ‘Physics Toolbox Sensor Suite’ to capture accelerometer data during a simulated call, then fed the data to MATLAB to analyze it and extract features from the recorded signal.

A machine learning (ML) algorithm was trained using readily available datasets to recognize speech content, caller identity, and gender.
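The researchers describe combining time- and frequency-domain features with classical ML algorithms. The Python sketch below illustrates that general pipeline with hypothetical choices: it computes a few common features (mean, standard deviation, zero-crossing rate, and spectral centroid) from accelerometer-like windows and labels new windows with a nearest-centroid classifier. The function names, features, classifier, and synthetic sine-wave data are assumptions for illustration only; they are not the paper's feature set or models.

```python
import cmath
import math
import statistics


def extract_features(window, fs):
    """Hypothetical feature vector for one accelerometer window.

    Time domain: mean, standard deviation, zero-crossing rate.
    Frequency domain: spectral centroid divided by the Nyquist frequency,
    so every feature stays roughly within [0, 1].
    """
    n = len(window)
    mean = statistics.fmean(window)
    std = statistics.pstdev(window)
    centered = [x - mean for x in window]
    crossings = sum(1 for a, b in zip(centered, centered[1:]) if (a < 0) != (b < 0))
    zcr = crossings / (n - 1)
    # Naive one-sided DFT magnitudes (bins 0..n/2) for the spectral centroid.
    mags = [abs(sum(centered[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
            for k in range(n // 2 + 1)]
    total = sum(mags) or 1.0
    centroid_hz = sum((k * fs / n) * m for k, m in enumerate(mags)) / total
    return [mean, std, zcr, centroid_hz / (fs / 2)]


class NearestCentroid:
    """Minimal classical classifier: predict the class whose mean feature vector is closest."""

    def fit(self, X, y):
        grouped = {}
        for row, label in zip(X, y):
            grouped.setdefault(label, []).append(row)
        self.centroids = {label: [statistics.fmean(col) for col in zip(*rows)]
                          for label, rows in grouped.items()}
        return self

    def predict(self, row):
        return min(self.centroids, key=lambda label: math.dist(row, self.centroids[label]))


def tone(freq, fs=500.0, n=100, phase=0.0):
    """Synthetic stand-in for an accelerometer window (a pure sine wave)."""
    return [math.sin(2 * math.pi * freq * t / fs + phase) for t in range(n)]


if __name__ == "__main__":
    fs = 500.0  # assumed sampling rate (Hz)
    X, y = [], []
    for phase in (0.0, 0.5, 1.0, 1.5):
        X.append(extract_features(tone(60, fs, phase=phase), fs))
        y.append("low")
        X.append(extract_features(tone(180, fs, phase=phase), fs))
        y.append("high")
    clf = NearestCentroid().fit(X, y)
    print(clf.predict(extract_features(tone(70, fs, phase=0.3), fs)))   # low
    print(clf.predict(extract_features(tone(170, fs, phase=0.3), fs)))  # high
```

Normalizing the spectral centroid by the Nyquist frequency keeps all features on a similar scale, so plain Euclidean distance is not dominated by one feature. A real pipeline would typically standardize features and compare several classifiers on labeled recordings.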

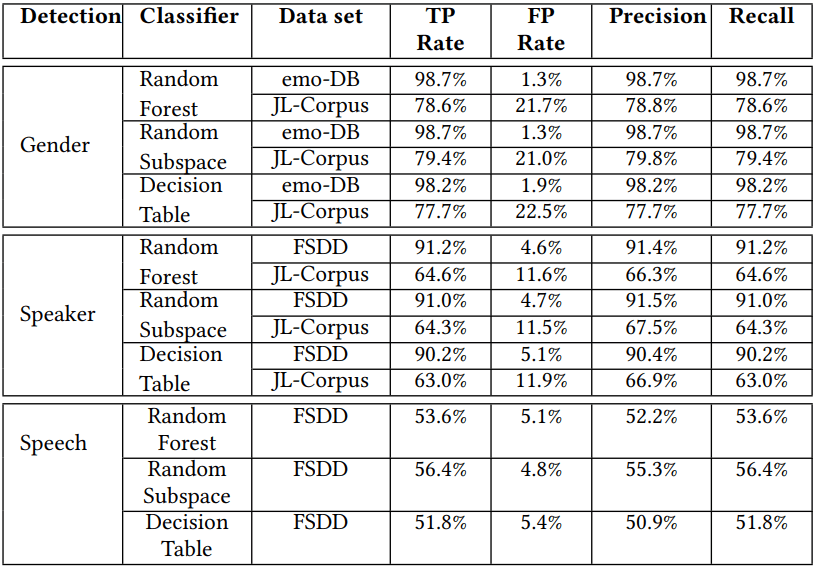

Results varied depending on the dataset and device, but overall they were promising for eavesdropping via the ear speaker.

Caller gender identification on the OnePlus 7T ranged between 77.7% and 98.7%, caller ID classification between 63.0% and 91.2%, and speech recognition between 51.8% and 56.4%.

“We evaluate the time and frequency domain features with classical ML algorithms, which show the highest 56.42% accuracy,” the researchers explain in their paper.

“As there are ten different classes here, the accuracy still exhibits five times greater accuracy than a random guess, which implies that vibration due to the ear speaker induced a reasonable amount of distinguishable impact on accelerometer data” – EarSpy technical paper
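The "five times" comparison follows from the chance level for ten equally likely classes:

$$
p_{\text{chance}} = \frac{1}{10} = 10\%, \qquad \frac{56.42\%}{10\%} \approx 5.6
$$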

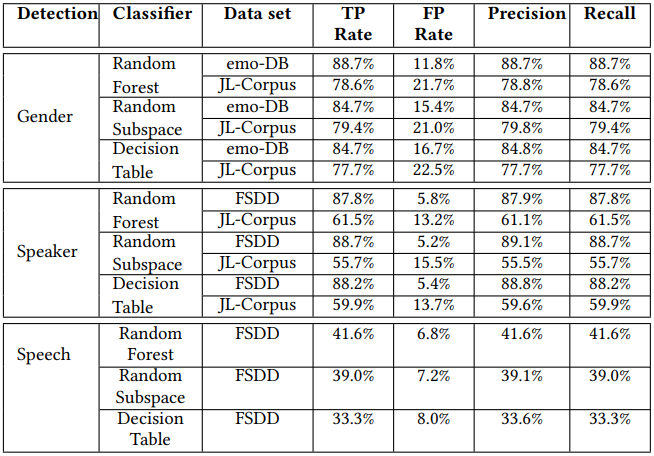

On the OnePlus 9, gender identification topped out at 88.7%, speaker identification dropped to an average of 73.6%, and speech recognition ranged between 33.3% and 41.6%.

For comparison, using the loudspeaker and the ‘Spearphone’ app the researchers developed while experimenting with a similar attack in 2020, caller gender and ID classification reached 99% accuracy, while speech recognition reached 80%.

Limitations and solutions

One factor that could reduce the efficacy of the EarSpy attack is the volume users choose for their ear speakers. A lower volume could prevent eavesdropping via this side channel, and it is also more comfortable for the ear.

The arrangement of the device’s hardware components and the tightness of the assembly also affect how speaker reverberations spread.

Finally, user movement or vibrations introduced from the environment lower the accuracy of the derived speech data.

Android 13 introduced a restriction on collecting sensor data at sampling rates above 200 Hz without permission. While this prevents the attack from sampling at the default rate (400–500 Hz), accuracy drops by only about 10% if it is performed at 200 Hz.
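The sampling-rate cap matters because a sensor sampled at a rate fs can only represent vibration frequencies up to fs/2, the Nyquist frequency: 100 Hz at 200 Hz, versus 200–250 Hz at the 400–500 Hz default. The Python sketch below computes where a pure tone appears after sampling and confirms it with a DFT peak. It is a simplification that ignores any anti-aliasing filtering a real sensor pipeline may apply, and the 150 Hz test tone is an assumption for illustration.

```python
import cmath
import math


def nyquist(fs):
    """Highest frequency (Hz) representable at sampling rate fs (Hz)."""
    return fs / 2


def apparent_frequency(f_signal, fs):
    """Frequency (Hz) at which a pure tone appears when sampled at fs.

    Tones above fs/2 fold back (alias) into the range [0, fs/2].
    """
    folded = math.fmod(f_signal, fs)
    return min(folded, fs - folded)


def dominant_frequency(f_signal, fs, n=200):
    """Sample a cosine tone and return the frequency of the strongest DFT bin."""
    samples = [math.cos(2 * math.pi * f_signal * t / fs) for t in range(n)]
    mags = [abs(sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
            for k in range(n // 2 + 1)]
    k = max(range(len(mags)), key=mags.__getitem__)
    return k * fs / n


if __name__ == "__main__":
    for fs in (500.0, 400.0, 200.0):
        print(fs, nyquist(fs), apparent_frequency(150.0, fs), dominant_frequency(150.0, fs))
    # A 150 Hz tone is preserved at 500 Hz and 400 Hz but appears at 50 Hz when sampled at 200 Hz.
```

Content above 100 Hz is therefore either lost or folded onto lower frequencies at the capped rate.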

The researchers suggest that phone manufacturers ensure sound pressure stays stable during calls and place motion sensors where internally originating vibrations cannot affect them, or at least where that impact is minimal.
